Beaver dam, Hesse, Germany.

The ecologist Paul Colinvaux, author of the classic text Why Big Fierce Animals Are Rare, made an observation in his deeply flawed book The Fates of Nations that is very useful for modelling human social dynamics:

Unlike other animals, we can change our social habits to fit ourselves for new niches … P.42

A niche being:

… all the things about a kind of animal that let it live: its way of feeding, what it does to avoid enemies, how it is fitted to the place it must dwell. P.19

How, as he says elsewhere in the book, a species fits into the web of life.

For instance, any theory of elite over-production is a niche theory, being based on the social dynamics of more people seriously aspiring to occupy an elite niche in a society than there are such niches to be filled.

Within the biosphere, species typically have a specific niche that they fill. Even if they are a differentiated species, such as ants and termites, their form still dictates, along with interaction with other species and the world around them, the niche they occupy in the web of life.

That interaction with other organisms and the environment can involve a certain amount of niche construction through the impacts their actions have on the environment around them and on other species. Such niche construction can leave an ecological inheritance to their descendants. It can potentially increase the number of niches available for the species, or reduce the variability (and so increase the predictability) of the niche. Such niche construction provides, as part of the purposive (i.e. goal-directed) behaviour of living organisms, an ordering principle within the biosphere.

The population of a species is set by the number of available niches for that species. (Hence, big, fierce animals are indeed rare.) The contest within species is to occupy one of those niches and reproduce more future occupants of those niches. Genetic lineages that successfully do so get to continue and those that don’t disappear. Different species (i.e. different sets of genetic lineages) compete to occupy and sustain niches within the nutrition and reproductive possibilities of the eco-system around them.

All currently existing genetic lineages have genetic ancestries that are much older than their current species. A genetic lineage, in the process of evolution and replication, can pass through existing as, and within, many different species.

Processes of adaptation can be expected to have some sort of search process inherent in them (as evolutionary biologist Bret Weinstein has suggested), for the ability to search out survival and replication possibilities increases the chances of continuing genetic replication. Replication is the game that genes play, one played via selection for or against traits within subsistence and reproduction strategies. (All one needs for a game, in an analytical sense, is feedback and response in the context of limited resources where some outcome, in this case staying in the game, is a "win": intent is not necessary.)

Such search processes for successful replication possibilities, which include niche construction, enable the biosphere to have the level of order it does. For random mutation is nowhere near enough to explain the observed level of order in the biosphere, even within geologic time frames. This is especially so given the periodic mass extinction events and the explosions in new species that follow them. It is natural selection acting on strategies, particularly with the genetic recombinations of sexual reproduction, that provides many more opportunities for search-and-discovery of new opportunities.

A nice example of the interaction between search, niche, niche construction and genetic evolution is provided by the development of lactase persistence in humans. If pastoralists can evolve the capacity to continue to consume milk after weaning, that greatly increases (by around fivefold) the calories they can harvest from a given area of grasslands, dramatically increasing the number of sustainable pastoralist niches. This has happened more than once in human history, with at least four separate versions of such genetic mutation occurring in different pastoralist populations and subsequently rapidly spreading through such populations.

The most widespread such mutation is that which developed among Proto- (or at least very early) Indo-Europeans. Indeed, their particular mutation provides an excellent genetic marker of their pastoralism and the extent of their spread. A spread which, in the case of the Indo-European pastoralists, was almost certainly sustained over such a breadth of time and space precisely because lactase persistence gave them a biological advantage over other populations that could only be gained by other populations through interbreeding with the Indo-Europeans.

Trade-offs rule

A niche always involves a series of trade-offs. There are trade-offs both in pursuing the internal competition for available niches and in sustaining niches. The trade-offs a particular niche involves develop interactively with the energy-and-nutrient possibilities, and threat profiles, the niche occupant has to deal with to sustain itself and reproduce.

What makes Homo sapiens so ecologically distinctive is the extent of our ability to choose new trade-offs, to shift across trade-offs, and so to adapt to, and create, new niches. An ability intimately interwoven with us being the technological ape, the toolmaking ape.

There is no single human niche. There is, for instance, no single forager niche, as can be seen by comparing, say, the Inuit with the Hadza. The human capacity to change interactions with the surrounding environment (and each other) so as to create new niches, even within the nomadic foraging pattern, is how we became the global ape.

Our varied human niches are created and sustained by our cognitive capacity for learning and discovery and our cultural transmission of information and skills. Our niche construction is a manifestation of us being so much the cultural species. Human technologies are sustained via our cultures which in turn are profoundly affected by our technologies.

We Homo sapiens are the niche-creating, indeed niche-multiplying, species. We can adapt to, and create, new niches, doing so either in addition to, or by replacing, existing niches. Indeed, we can create and occupy multiple niches across and within human societies.

Hence we have not only spread across the planet, becoming the global ape, but have also increased hugely in population. If you can create new niches, you can create new ecological spaces to occupy, with new resources to use.

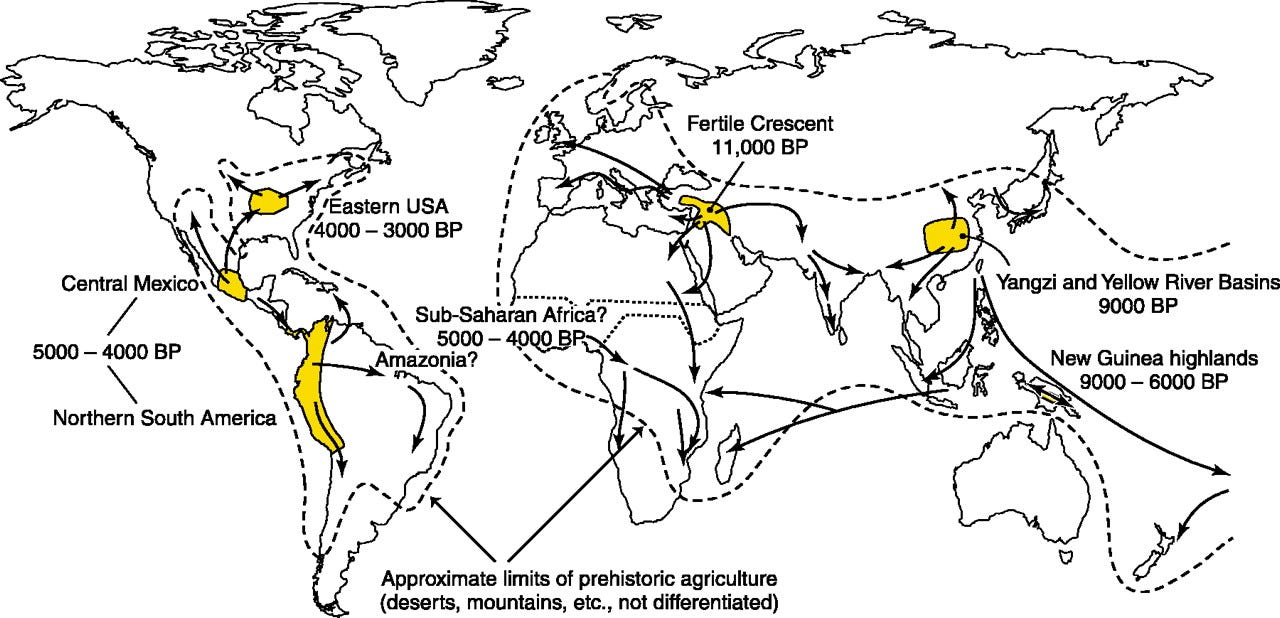

The human nomadic-forager niche was already more varied than the niches of any other species. Taking up sedentism added a new level of variation. Taking up farming and pastoralism extended that variation even further. As did creating chiefdoms and states. Industrialisation — the Great Enrichment — then increased the number and variety of human niches by further orders of magnitude. It also increased the fluidity of human niches, something information technology has ratcheted up further.

Human niches

Malthusian models, models developed from the population-dynamics insights of the Rev. Thomas Malthus (1766–1834), are models of the limits to population given available resources, including available technology. Niches are the analytical mechanism connecting population size to available resources. Malthusian models should therefore incorporate the insight of ecology that it is available niches that set the limit to a population. Hence Malthusian models should be based on niches.

If this is not done, if people, rather than niches, are the unit of Malthusian models, it becomes much more difficult to deal with trade-offs within niches. Indeed, it becomes easy to operate such models in ways that are blind to such trade-offs.

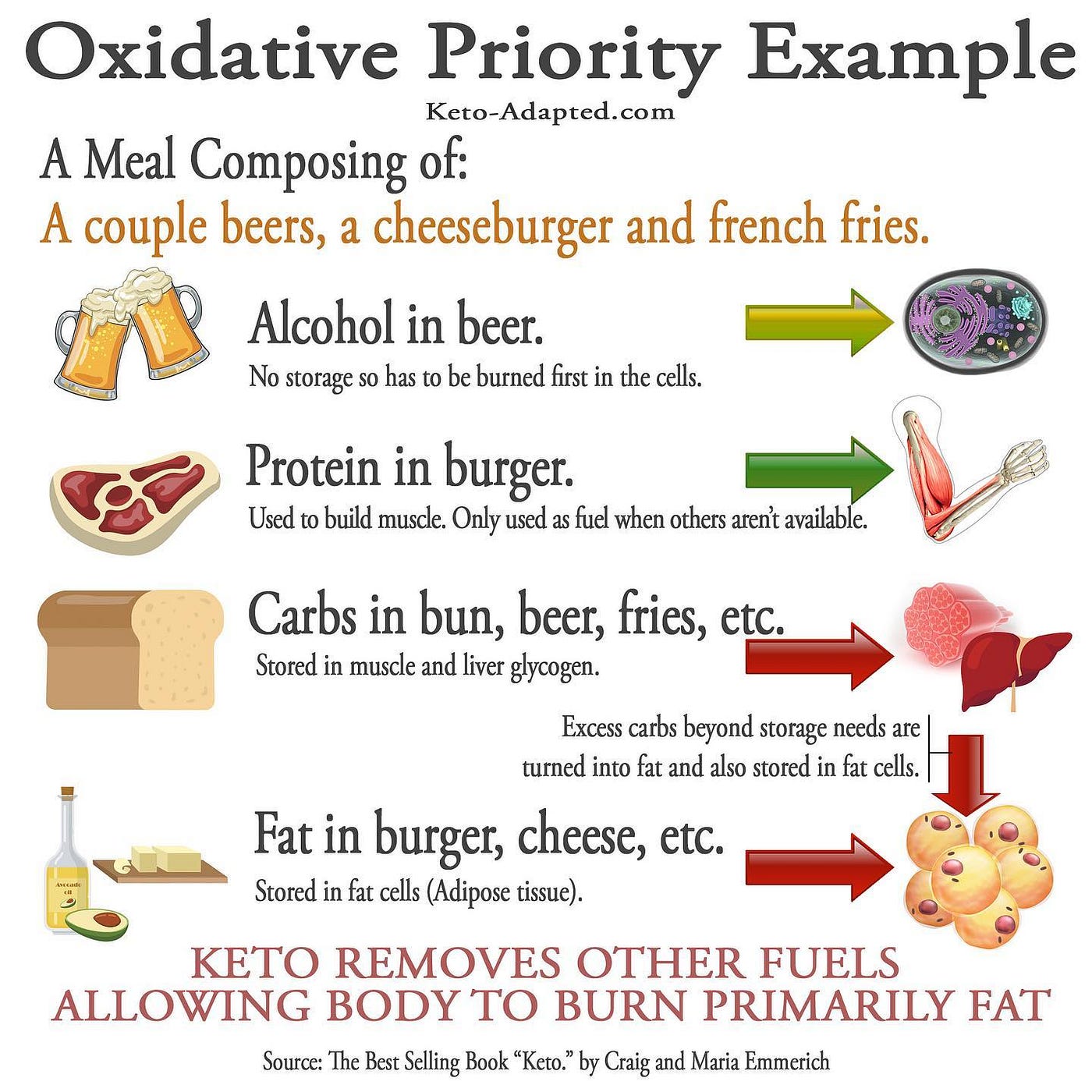

Conversely, switching to an ecological analysis, making niches the basic unit of the model, makes it possible to consider trade-offs within niches. For there will clearly be a range of trade-offs that are possible within niches that can yet remain viable such that occupants are able to reproduce. For instance, trading off more ready access to energy (calories) for lower long-term access to nutrients.
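What making niches the unit might look like can be sketched in a few lines of Python. This is a toy under loudly stated assumptions, not an estimated model: the `Niche` class, the `niche_capacity` and `simulate` functions, and every number in it (resource needs, the health index, offspring rates) are hypothetical, chosen only to show that the same resource base can carry different ceilings of population at different levels of health, depending on the trade-offs within the niche.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Niche:
    """A hypothetical niche type; all fields are illustrative assumptions."""
    name: str
    resource_per_niche: float   # resource units needed to sustain one niche
    health_index: float         # 0..1, stand-in for long-run nutrient adequacy
    offspring_per_niche: float  # surviving offspring raised per occupied niche


def niche_capacity(total_resources: float, niche: Niche) -> int:
    """Number of niches of this type that the resource base can sustain."""
    return int(total_resources // niche.resource_per_niche)


def simulate(total_resources: float, niche: Niche,
             population: int, generations: int) -> List[int]:
    """Population grows through reproduction but is capped by available niches."""
    capacity = niche_capacity(total_resources, niche)
    history = [population]
    for _ in range(generations):
        occupied = min(population, capacity)
        population = min(int(occupied * niche.offspring_per_niche), capacity)
        history.append(population)
    return history


# Same resource base, two different internal trade-offs (numbers are made up).
forager = Niche("forager", resource_per_niche=10.0,
                health_index=0.9, offspring_per_niche=1.2)
farmer = Niche("farmer", resource_per_niche=2.0,
               health_index=0.6, offspring_per_niche=1.5)

for niche in (forager, farmer):
    history = simulate(1000.0, niche, population=20, generations=30)
    print(niche.name, "ceiling:", history[-1], "health:", niche.health_index)
```

In this sketch the population ceiling is a property of the niche, not of the person, so a shift to a smaller, less healthy niche shows up as more niches rather than being averaged away.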

This ability to shift trade-offs is not only compatible with Homo sapiens having the most biologically expensive children in the biosphere, it is a product of the cooperative cognitive complexity that those long childhoods evolved to sustain.

Quality versus quantity

One of the possible trade-offs within human niches is between quantity of offspring and quality of offspring. The more skills are needed to successfully occupy the targeted niche, the more likely parents are to shift towards quality of offspring over quantity. Conversely, the fewer skills and the less learning needed, the more likely parents are to shift towards quantity of offspring over quality.
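The logic of this trade-off can be put as a simple budget constraint. The sketch below is illustrative only: the function `affordable_children`, its parameters and the numbers are all assumptions, standing in for whatever mix of time and sustenance parents actually have to allocate.

```python
def affordable_children(parental_budget: float,
                        upkeep_per_child: float,
                        training_per_child: float) -> int:
    """Children a household can raise when each child costs upkeep plus training.

    All quantities are in the same hypothetical units (say, years of parental effort).
    """
    return int(parental_budget // (upkeep_per_child + training_per_child))


# Same budget and upkeep; only the skill (training) burden of the niche differs.
low_skill_niche = affordable_children(60.0, upkeep_per_child=10.0, training_per_child=5.0)
high_skill_niche = affordable_children(60.0, upkeep_per_child=10.0, training_per_child=20.0)
print(low_skill_niche, high_skill_niche)
```

With a fixed budget, raising the training needed per child necessarily lowers the number of children that can be raised, which is all the quantity/quality trade-off requires.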

Farming is a lower-skill niche than foraging, given that it greatly reduces search costs and concentrates on a very small number of species. By contrast, the productivity of foragers typically peaks around 45 years of age and foraging children typically don’t break even on calorie collection and consumption until they are almost 20. This is why mixed foraging-planting niches could be sustained by sedentary foragers, as they were, for millennia: there was very limited extra skill burden involved in planting some edible plant for later harvesting.

The lower skill burden is also part of how farming lowered the cost of children. Farming (and plant and animal domestication generally) required less time training children, who could become more productive earlier, while sedentary living meant that children were more able to look after each other. The easy (after processing the harvested plants) access to calories from domesticated plants also permitted earlier weaning of infants and so increased fertility, enabling the quantity/quality trade-off to be made. (The energy/nutrient trade-off involved, resulting in worse health outcomes, emphasises that this was a quality/quantity trade-off.)

Connection, pooling and exchange

As intensely social beings, whose survival and reproductive success depends fundamentally on cooperation, humans manage and sustain their niches through processes of pooling (sharing), connection and exchange. With pooling being the use of common resources and connection being a continuing series of mutually acknowledged interactions.

We are the only species that regularly displays the behaviour of engaging in “truck, barter, and exchange one thing for another”. Whether Adam Smith was right to call this a propensity can be disputed, but it is certainly a distinctive, and recurring, human pattern.

A pattern that occurs because we are so much the normative species, a crucial element in us being the cultural species par excellence. It is not that there is no culture at all in other species, nor anything that might reasonably be called normative behaviour. It is just that we display both at a rate orders of magnitude greater than other species. Just as, and not coincidentally, our tool making and use is orders of magnitude greater.

Exchange involves the exchange of property: what was yours becomes mine, what is mine becomes yours. The crucial idea in property is not “mine!” (any silverback gorilla with a harem can do that) but “yours!”: the acknowledgment of possession by others and associated rules of rightful transfer from one owner to another. Which is normative.

The need to defend our social space, plus the information associations an owned thing can have, generates an endowment effect (valuing something we own over an identical thing that we do not). The effect on exchange behaviour tends to diminish with market experience in trading such things, as distinct from merely being an experienced trader, for the owned thing then becomes more something to be traded (i.e., transferred) than something distinctively ours.

A chimpanzee in a behavioural lab conforms more strongly to the predictions of game theory (i.e. conforms more to the predictions of Homo economicus) than humans do, because we Homo sapiens are far more normative than are Pan troglodytes. That far greater normative capacity is part of us being the cultural species and fairly clearly arose out of our highly cooperative subsistence and reproduction strategies.

Being so much the cultural species, including being able to marshal exchange as part of socially and technologically constructing new niches, is what has made us the global ape. The discoveries of the anthropogenic sciences undermine both the cultural hegemony model used by many sociologists and anthropologists and the rational self-interest model used by economists and political scientists.

Human history is one of the social and technological construction of niches via the mechanisms of pooling, connection and exchange. For instance, shifting from nomadic foraging to sedentary foraging, and especially to sedentary farming, changes the dominant structure for pooling production and consumption from the multi-family band of shifting membership to a (typically) single-family household with much more stable membership.

Social exchanges are exchanges in the context of connection; so in the context of a continuing series of mutually acknowledged interactions. Commercial exchanges are exchanges that, if they continue across time, include managing connection(s), but are otherwise discrete events involving transfers of resources via goods or services.

Social exchanges are therefore dominated by the norms and expectations of connection. It would be an insult to offer to pay a friend or relative for a meal they have cooked for you.

As commercial exchanges are exchanges where any connection arises from within the context of exchange, they are dominated by the norms and expectations of trading and commerce. It would be theft or fraud to not pay for a commercially-provided meal. (There is a useful discussion of the difference between social and market exchanges in Chapter 4 of Dan Ariely’s book Predictably Irrational.)

Any pooling in the case of social exchanges is based on the pre-existing (or sought) connections. Pooling in the case of commercial exchange results from the exchange itself.

As social exchanges are based on the norms of connection, the level of mutual regard inherent in the social context of the path of interaction typically involves considerable density of information. Commercial exchanges are based on commercial norms that typically involve much lower levels of information, outside the exchange itself and associated patterns of exchange. This allows commercial exchanges to scale up much more than can reliance on connection and local pooling. Hence such exchange can expand the size and number of human niches.

Economising on information is much of the advantage of commercial exchange. Sufficiently dense patterns of exchange result in the development of exchange goods: goods held so as to be able to participate in future exchanges. At some point, a medium of account (i.e. full money) may develop, due to its value in economising on information: notably search, negotiation and accounting costs. (A medium of account being something used to both quantify and discharge obligations.) This increases the scaling-up effect of commercial exchange on the size and number of human niches.

Gifts and favours are investments in connection. An appropriate gift can express the strength of a connection by demonstrating how accurately the giver of the gift “sees” the other person and how important their connection is to the giver. A public gift makes a public display of these things.

In societies with very little exchange, but very dense webs of connection, failure to be able to sustain the pattern of gifting that maintains connections can drive individuals into “gift bankruptcy” and so a form of debt-bondage. Just as with commercial bankruptcy, it represents a terminal inability to meet one's obligations.

Niche size and well-being

Malthusian models for pre-industrial societies that use people, rather than niches, as the unit of analysis imply that human well-being will tend to return to a recurring steady-state, as increased resources are eventually matched by increased population. This makes it difficult for such models to conform to the strong evidence that farming was less healthy than foraging. But, if the models focus on niche size instead of human well-being, then different internal trade-offs within niches can be incorporated within the model, even if niche size tends to return to a recurring steady-state for a given level of technology.

As mentioned above, a possible such trade-off is to have more readily available energy but less nutrients, resulting in smaller stature and worse health. As long as the trade-off does not get in the way of successful reproduction, it can be a socially viable trade-off. Indeed, if accepting the trade-off results in increased capacity to generate such niches for your descendants to occupy, it will become a successful, even dominant, trade-off.

Trade-offs between niche size (so niche quality) and niche number (niche quantity) can occur in various forms. Where you are in the spectrum of control of resources determines the consequences of different decisions in intergenerational transfer of assets. Thus, single-heir systems, such as primogeniture, are structured to maintain a certain niche size. Single-heir systems typically involve accepting that one’s other children end up with smaller social niches.

Elite over-production can be de-stabilising for societies precisely because more people are seeking (and have the resources) to compete for elite niches than there are elite niches to sustain them. Such competition, if of sufficient intensity, can be highly destructive to the normative order of a society.

Elite over-production by polygynous hereditary elites is likely to have been a major cause of the transience of steppe empires, for example, given that the herding productivity of grasslands was an enduring constraint on the number of pastoralist niches.
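The arithmetic behind such over-production is simple compounding against a fixed ceiling. The sketch below is purely illustrative: the function name, the assumption that every elite-born heir goes on aspiring to an elite niche, and the numbers (100 elite niches, 3 heirs per elite male versus 1) are hypothetical, not historical estimates.

```python
from typing import List


def aspirants_per_elite_niche(elite_niches: int,
                              heirs_per_elite: int,
                              generations: int) -> List[float]:
    """Ratio of elite aspirants to elite niches, generation by generation.

    Assumes (for illustration) a fixed number of elite niches and that all
    elite-descended heirs continue to aspire to one.
    """
    aspirants = elite_niches
    ratios = []
    for _ in range(generations):
        aspirants *= heirs_per_elite
        ratios.append(aspirants / elite_niches)
    return ratios


polygynous = aspirants_per_elite_niche(100, heirs_per_elite=3, generations=3)
single_heir = aspirants_per_elite_niche(100, heirs_per_elite=1, generations=3)
print(polygynous, single_heir)
```

Under these assumptions, three aspiring heirs per elite male means 27 aspirants per elite niche within three generations, while a replacement-level elite stays at one aspirant per niche.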

Some niches need more resources to be sustained and/or are sustained at a higher level of health. Sufficient increase in resources, such as the Great Enrichment that began with the application of steam-power to transport by the development of railways and steamships, can lead to increases in both quantity and quality of niches and, if the cost of children rises sufficiently, to lower fertility.*

Farming, due to its elimination of most search costs and concentration on a far narrower range of food species, required less skill than foraging, and so children could contribute to it more, earlier in life. There was therefore no pressure to increase the quality of children, but there were likely benefits to having more children, including more capacity to create kin connections through marriage and more of a buffer against ageing.

The first constraint in the construction of human niches is time. There are only a certain number of hours in a day. The second constraint is sustenance, the need for a certain amount of energy and nutrients to sustain oneself. Energy is more immediately urgent than nutrients, so it is possible to make a choice that provides sufficient energy but involves some deficiency in nutrients. This will have future health implications, but, as noted above, this may not block the continuation and replication of the niche.

If the niche is going to be replicated in the next generation, then time and sustenance have to be allocated to reproduction and training. At the core of Homo sapiens being the cultural species is direct or indirect investment in the training of offspring.

Hence the potential trade-off here between quality of offspring and quantity of offspring. As we have seen, it is entirely possible to have a niche that reduces the cost of children, for instance making it easier to feed them and making it less likely to lose them in early infancy, yet also means that their long-term health is poorer. Ironically, a higher rate of infant mortality in a situation of restricted fecundity (due to long weaning periods, for example) may make for more investment in the quality of children. Particularly if there is more nutrient-rich food available to feed them.

One of the changes industrialisation created was to markedly increase the returns to education while reducing the ability of children to contribute to household production. Improved sanitation and increased medical knowledge also markedly reduced infant mortality. This resulted in a dramatic shift away from quantity of children towards quality of children, with large falls in fertility rates.

Thus, the foraging-to-farming shift from quality to quantity of children has been more than reversed. But at, of course, hugely higher population levels.**

Niche-creating species

Humans are members of a species that technologically creates a variety of niches by intensely social and cultural processes. Models of human populations need to be able to incorporate the reality of the creation of varied niches. Assuming a single human niche certainly hugely simplifies modelling, but the analytical accuracy cost in usefully analysing human social dynamics by doing so can become very high, very quickly. Similarly with attempting to model human population dynamics without being able to incorporate the varying trade-offs of human niches.

Thinking of humans as the niche-creating species, and of niches as involving various trade-offs, clarifies human social dynamics.
