The inability to see oneself is a core element of much modern moralising

We live in a time marked by a striking pattern. In many ways, societies are much more socially egalitarian than they have ever been. Openly class-based language of social superiority is much, much rarer than it used to be.

Conversely, we live in an age of intense moral inegalitarianism. For instance, academic commentary on Trump voters has predominantly treated voting for Trump as a sign of moral delinquency. So has much of the academic commentary on Brexit voters. Such moral elitism is far more common than any explicit class elitism.

There is, of course, very much an underlying social dimension to this moralised denigration, but it pertains to a class that cannot see itself. Specifically, the possessors of human-and-cultural capital. (Human capital being skills, habits, knowledge and other personal characteristics that affect our productivity.)

The human-and-cultural capital class do not, and in a sense cannot, preen as a class because they very determinedly do not see themselves as a class. They are a meritocratic elite, so obviously not a class. Hence the socially egalitarian language.

If one were to nominate the single biggest difference between contemporary “woke” progressivism and previous iterations of progressivism, it would be the disappearance of class as an object of social concern.

A rather telling indicator of this is that it makes sense to talk of “woke capital”, but woke workers are not a thing. Indeed, if “woke” cultural politics become sufficiently salient in electoral politics, working-class voters become much more likely to vote in dissenting, anti-woke ways.

Nevertheless, modern “woke” progressivism is very much driven by class politics. The class politics of status rather more than the class politics of economic interest. But that is not surprising. It is actually hard for any class to combine on the matter of economic interests, as the biggest competitors for one’s income are people seeking the same sort of income. It is much easier for a class to combine on the matter of class status, for that is a benefit they can, and do, share. Status as a member of a group is non-rivalrous (at least over some range) within the group, though it may be highly rivalrous with respect to other groups.

(In terms of political dynamics, income and benefits from the state that are not dependent on working for the state are, obviously, in a bit of a different category to ordinary economic interest. The more benefits the state hands out, the stronger the incentives to act collectively to increase benefits received and minimise costs paid. Though the weaker become the incentives to act cooperatively to independently create positive social returns.)

Unwillingness to see

There are two levels of self-deception going on in the class politics of “woke” progressivism. The first is the inability to see themselves as a class. The second is the inability to see the mimetic nature of their moralising. (The term “mimetic” may be a little mysterious. I will explain it presently, but in brief, mimetic desire is desire copied or imitated from others.)

The inability to see themselves as a class is straightforward, though it goes beyond seeing themselves as a meritocratic elite, and so not a class. “Woke” progressivism is overwhelmingly concentrated in the human-and-cultural capital class. The most “woke” industries are the ones most dominated by the possessors of human-and-cultural capital: entertainment, education, news media and online IT. The industries that constitute the cultural commanding heights of contemporary society.

Marxism offers by far the most elaborate schema of class available to progressive thought. Human capital is not a form of capital that Marxism grapples with much, the concept not being developed in detail until decades after Marx’s death, even if it dates back to Adam Smith. The human-and-cultural capital class thus becomes effectively invisible as a class, both analytically and, given their sense of themselves as a meritocracy, in self-identity.

Conversely, they typically very much see themselves as a moral elite, a moral meritocracy: that they possess the correct, and highly moral, understanding of the world. The sense of being members of a cognitive meritocracy (and elite) converges with a sense of being members of a moral meritocracy (and elite). This is, to invoke a touch of René Girard, mimetic moralising: mimetic desire being desire copied from another. They copy moral postures from each other based on a common desire to be, and to be seen to be, members of the moral meritocracy.

Unlike other forms of mimetic desire, mimetic moralising is not inherently rivalrous. On the contrary, moral agreement creates a mutually reinforcing sense of moral status.

This common desire to have a mutually self-reinforcing sense of moral status, not merely cognitive status but also moral status, creates a powerful tendency towards conformity. Specifically, it creates prestige opinions, opinions that mark one as a good, informed person. Opinions that make one a member of the club of the properly, intelligently, moral.

Expansive stigmatisation

The immediate corollary of this is that contradictory opinions become evil, wicked, ignorant, stupid. They are the opinions of those who, by holding them, place themselves outside the club of the properly, intelligently moral. Those who do not have moral merit in their opinions, who are not members of the moral meritocracy.

For opinions can only create prestige if contradicting them has negative prestige. Thus, dissent from these morally prestigious opinions cannot be legitimate, because if dissent is legitimate then there is nothing special about the putative prestige opinions. Opinions that it is legitimate to disagree with do not sort the morally meritorious from those not morally meritorious. Thus, they are not boundary-setting opinions that mark membership of the club of the morally meritorious.

Mimetic moralising thus insists on the right to police legitimacy, to police what is seen as morally acceptable. It turns morality into the property of the mimetic elite, who are deemed to have the right to police the public space.

It also makes the set of prestige opinions something of a moveable feast. The key thing is to stay inside the moral club, not to protect some permanent doctrinal purity. On the contrary, being alert enough to keep up with shifts in the prestige opinions, and shifting linguistic taboos, is part of how one proves and maintains membership of the moral meritocracy. With the necessary linguistic sensitivity helping to further sort those who are morally meritorious from those who are not.

The level of linguistic attentiveness required does much to ensure that working-class folk never quite make it into the morally meritorious. Particularly if they express themselves on various taboo-laden topics.

If mimetic moralising is the highest (status) good, then reason, evidence and consistency must be subordinated to it. Indeed, those who invoke reason, evidence and consistency against any of the prestige opinions are enemies of (mimetic) righteousness, because they fail to converge with the righteous opinions and they contest the mimetic elite’s ownership of morality. They thus proclaim their failure to join the club of the morally meritorious. Worse, they threaten the very gatekeeping distinctions that create the status of being morally meritorious, that make club membership valuable.

Such stigmatisation of those outside the boundary of the morally meritorious has more power if it levers off things already widely accepted as being wrong or abhorrent. Thus, the accusation “racism!” works so effectively not because people are generally racist but precisely because they are generally not. The more racist society actually is, the less effect, the less negative resonance, the accusation has. Conversely, the less racist society becomes, the more potential effect the accusation of racism has (with some adjustment for diminishing returns from over-use). This pattern is aided by the cognitive tendency to expand the ambit of a category or concept as the thing originally captured by the category or concept becomes rarer.

As this is a status strategy, the demand for grounds to stigmatise will be driven by the conveniences of club-gatekeeping rather than by what is actually happening. Thus, the demand for racism and acts of racism as weapons of stigmatisation will (and does) tend to exceed, often quite significantly, the supply of actual racism and racist acts. There is thus a double inflation: acts that are not racist (or may not even have occurred) will be denounced, hence the startlingly high rate of hate-crime hoaxes. Meanwhile, actual acts of racism will be inflated in their significance.

There will also be an ongoing search for new grounds of stigmatisation to continue the separation of the morally meritorious from the not so. The multiplication of belief sins (all the -ist and -phobe accusations, those of cultural appropriation and so forth) is precisely what one would expect in a time of mimetic moralising as a status strategy by members of the human-and-cultural-capital class.

There is also an obvious capacity for purity spirals. And for more junior employees to leverage their moral commitment against more established staff less au fait with the linguistic and moral nuances. Or who retain lingering normative commitments outside the mimetic moralising.

Display versus signal

It is useful to understand the difference between display in general and signalling specifically. In biology and economics, signalling involves the incurring of costs: the greater the cost incurred, the stronger the signal.

The mimetic moralising outlined above involves moral beliefs being on display but it rarely involves incurring any cost in such display. On the contrary, moralising as a status-game is all about the benefits of displaying one’s membership of the morally meritorious.

Even so, keeping up with shifts in linguistic taboos and prestige opinions does take attention, so it does work as a signal. Not giving heed to the inconsistencies between, and hypocrisies within, the prestige opinions also works as a signal. Especially if it means wearing derision or critique from those pointing out such inconsistencies and hypocrisies. Thus, inconsistency and hypocrisy act as more of a feature than a bug: they provide a signal of commitment to the club of the morally meritorious, a willingness to pay the membership dues.

Moral norms are norms held unconditionally: things people believe are morally right regardless of the expectations of others. Social norms are norms based on the expectations about what others will do, and what others expect people to do, that have associated social sanctions. Descriptive norms are norms simply based on expectations about what others will do and do not have associated sanctions. (This is the framework for norms developed by philosopher Christina Bicchieri.)

There is a perennial tendency to parade social norms as moral norms. Adhering to a moral norm, rather than a social norm, is a stronger signal of personal commitment and is more presumptively meritorious.

Parading social norms as moral norms allows use of the most complete language of normative commitment: indeed, the language of trumping normative commitment. It also permits a useful level of self-deception: I am not adopting this outlook because it is expected of me and there are costs if I do not, I am really morally committed to it. It becomes a stronger signal of moral in-group membership, of being soundly clubbable.

Mimetic moralising as a status strategy has a range of consequences. One is that it easily slides into a more general expectation of emotional and social protection. The claim that certain words being published make people feel unsafe makes more sense in this context. Being of the club of the morally meritorious easily generates a wider expectation of cognitive safety and protection, of not having to deal with dissenting ideas in one’s social (including work) milieu. The more one’s sense of identity and status is invested in a sense of being of the morally meritorious, the more that is likely to be the case. The more also one is likely to be willing to stigmatise, or otherwise sanction, those who dissent.

Another consequence is creeping organisational capture. The more people adhere to the mimetic moralising status strategy, the less willing they are likely to be to risk losing its protections and benefits by supporting dissenting voices or considering inconvenient facts or perspectives. Much of the power of mimetic moralising as a status strategy comes precisely from mutual confirmation of moral righteousness, of being of the morally meritorious. But the elevation of such mimetic moralising then hollows out any use of contrary norms, even if they are longstanding norms of the organisation or institution. Indeed, particularly with the fading away of Christianity, the process of capture is likely to be more effective the less competition there is within the normative space from contrary norms that people can acceptably invoke.

Unawareness required

The capacity for manipulation of all this by self-serving actors is very high. Nevertheless, in general, all this only works if the mimetic elite does not fully and consciously understand what they are doing. There is a real sense in which it is vital that they do not see themselves. For if they saw their attempt to control legitimacy, to possess morality as their property, to stigmatise disagreement, to protect their moralised sense of status, for what it was, it would stop working.

Prestige is a bottom-up status process, so if the game were fully revealed, and accepted as having been so revealed, it would no longer grant moral prestige. The social dominance would become obviously raw self-interest; their stigmatising exclusions, in all their projective exaggerations, transparently self-serving.

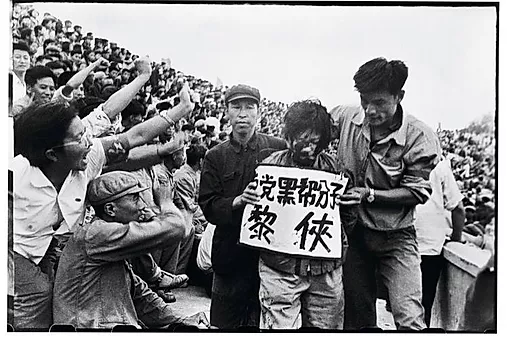

Conversely, if this mimetic moralising is the highest good, then mobbing is natural and inherent. Mobbing — that is, scapegoating stigmatisation — unites and protects the mimetic moralising, the shared sense of being a moral meritocracy. They are united in, and by, the stigmatisations that protect their sense of status, of being of the moral meritocracy.

What are the sins that they are stigmatising folk for? Typically, for failing to conform to righteous moral harmony, a society entirely without bigotry or ill-feeling. If some grand social harmony is insisted on, but does not yet exist in society, then someone must be to blame for the lack of harmony. Hence the scapegoating of those who disturb harmony is natural to the grand elevation of social harmony as the proper goal. Harmony being a much more all-pervasive and controlling ideal than mere order.

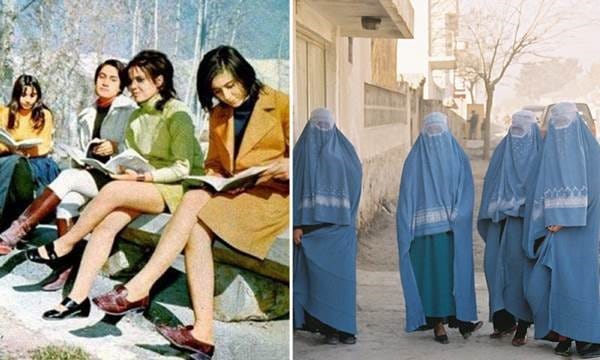

The more this wonderful harmony-to-be-created looks different from the morally-disorderly past, the more the past can be scapegoated. The past cannot answer back, after all. Unless there are scholars brave enough to stand against the mimetic moralising of their colleagues, and the stigmatisation that is likely to engender. (What was remarkable about the 1619 Project was not that so many of the morally meritorious rolled over for it, following the dynamics of mimetic moralising, but the number of historians who were willing to speak against it; in part due to more traditional leftists pushing back against identitarian progressivism. They had norms external to the mimetic moralising that they were willing to stand up for.)

Separating people from their past also separates them from norms and framings that might be invoked against the mimetic moralising.

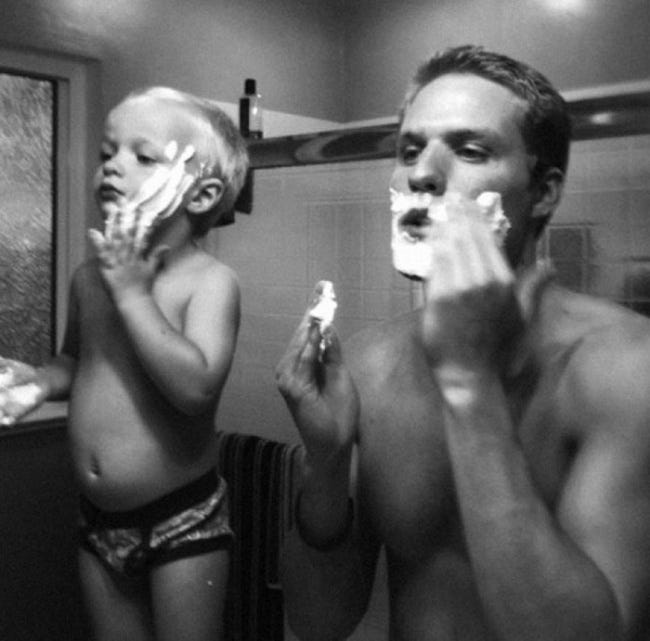

This scapegoating of the past leads to further self-deception. People whose moral postures are utterly conventional in their social circles (being morally conventional, and so mutually meritorious, is precisely the point), who shift their moral postures to keep up with what is conventional, who are assiduously morally conventional within their social milieu, laughably claim that they would not have adopted the moral postures that were conventional in the past.

This massive, and utterly self-serving, arrogance is the basis for their contemptuous rejection of a past that fails to live up to their lofty standards. Standards for which they make no sacrifices, beyond some (relatively minor) signalling costs. Indeed, standards which are all about them avoiding any sacrifice. Well, any sacrifice on their part.

Their mimetic moralising involves plenty of sacrificing of others to their sense of righteousness. Sacrificing the ability of outsiders to speak, to be heard; sacrificing reputations, jobs, careers. Lots of sacrifice imposed on others by the mimetic elite, none on themselves.

(This lack of sacrifice is why the notion of virtue signalling is somewhat problematic. There is precious little virtue in doing things that involve no sacrifice. Piety display is a better term for what goes on.)

But such sacrifice of others is how scapegoating and mimetic moralising work. Protecting the sense of status one finds congenial by loading guilt and rejection onto the objects of stigmatised sacrifice. Such an object of sacrifice is not a victim but guilty, and so deserves whatever comes to them as they are sacrificed to preserve the distinction between the morally meritorious and the stigmatised, morally other who is thereby evil.

Thus, to return to where we started, we can see that the eclipse of class from the language of progressivism makes perfect sense. For modern progressivism is dominated by the class that cannot see itself as a class. It is dominated by mimetic moralising that cannot see its own self-serving conventionality. Nor its stigmatising scapegoating. All of which works by shared habits of self-deception and could not stand genuine self-awareness.

Welcome to the world of the class that cannot see itself attempting to achieve social dominance by policing legitimacy in the service of its mimetic-moralising status strategy. And doing so via the stigmatising scapegoating it imposes on others.

And no, for those wondering, online IT is not going to stop its stigmatising exclusions any time soon. There is far too much status at stake.

Cross-posted from Medium. Like most of my pieces on Medium, this is more of an ongoing meditation than a finished piece, so is subject to ongoing fiddling.